OpenAI dropped an AI bombshell a few days back with its new text-to-video model, Sora. Let's dive in and see what Sora offers.

Long story short, Sora is an AI model that can create realistic and imaginative scenes from text instructions. You read that right: it's a text-to-video generation model.

Prompt: A cartoon kangaroo disco dances.

What is Sora?

Sora, which means "sky" in Japanese and "red rose" in Persian, is a text-to-video model capable of creating minute-long videos that are hard to tell apart from footage made by professionals.

Sora can create videos of up to 60 seconds featuring highly detailed scenes, complex camera motion, and vibrant emotions. OpenAI claims that it can also generate realistic video from still images or existing video footage.

How does it work?

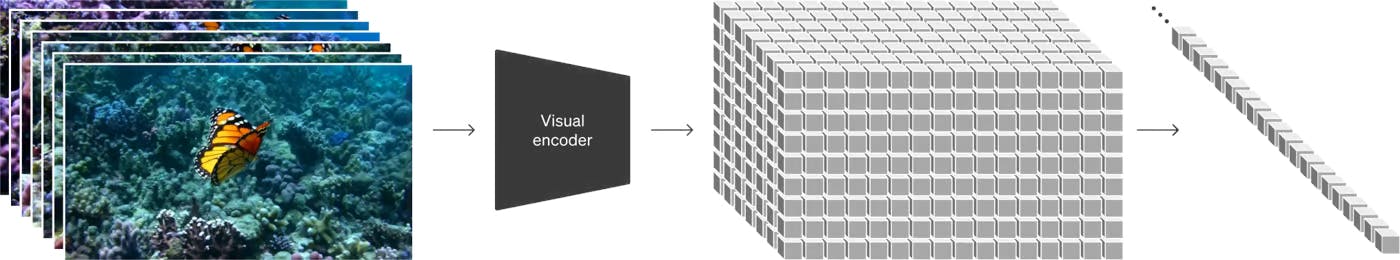

Imagine switching on an old TV that shows a noisy, grainy picture, then slowly removing the grain and fuzziness until you see a clear moving video. That's what Sora does: it is a diffusion model built on a transformer architecture that starts from noise and gradually removes it to create a video.
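To make the noise-removal idea concrete, here is a tiny sketch in Python. It is not Sora's code: the function name `toy_denoise`, the step schedule, and the "denoiser" are all made up for illustration. In a real diffusion model a trained network predicts the clean video from the noisy one and the text prompt; here we cheat and hand it the answer, just to show the loop that goes from static to a clean clip.

```python
import numpy as np

def toy_denoise(clean_video, steps=50, seed=0):
    """Start from pure noise and step toward a clean video.

    `clean_video` stands in for what a trained network would predict.
    A real model never sees the answer; this is an illustrative shortcut.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(clean_video.shape)  # the "grainy TV" picture
    errors = []
    for t in range(steps):
        predicted_clean = clean_video  # hypothetical stand-in for the network
        # Take a larger relative step as we get closer to the final step.
        step_size = 1.0 / (steps - t)
        x = x + step_size * (predicted_clean - x)
        errors.append(float(np.abs(x - clean_video).mean()))
    return x, errors

# A fake 4-frame, 8x8, RGB "video" with values in [0, 1).
target = np.random.default_rng(1).random((4, 8, 8, 3))
result, errors = toy_denoise(target)
print(f"error after first step: {errors[0]:.3f}, after last step: {errors[-1]:.3f}")
```

The error shrinks at every step, which is the "grain slowly disappearing" part of the analogy.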

Sora can generate an entire video at once rather than frame by frame. By feeding the model text descriptions, users can guide the video's content. And because the model sees many frames at a time, it can make sure a person stays the same even if they move off-screen for a moment.

Think of GPT models, which generate text from small units called tokens. Sora does something similar, but with images and videos.

It breaks videos down into patches, which lets it handle diverse datasets with varying durations, resolutions, and aspect ratios, improving its ability to generate high-quality outputs.

Sora uses the recaptioning technique from DALL-E 3, which involves generating highly descriptive captions for the visual training data. As a result, the model is able to follow the user's text instructions more faithfully in the generated video.
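Here is a rough sketch of what "breaking a video into patches" can look like. The function name `video_to_patches` and the patch sizes are my own picks, and this version cuts up raw pixels for simplicity, which is an assumption rather than a description of how Sora does it internally. The point is only that clips of different lengths and shapes all turn into a sequence of same-sized chunks, much like sentences of different lengths turn into tokens.

```python
import numpy as np

def video_to_patches(video, pt=2, ph=4, pw=4):
    """Cut a (frames, height, width, channels) video into spacetime patches.

    Returns an array of shape (num_patches, pt * ph * pw * channels).
    Patch sizes here are illustrative, not Sora's actual values.
    """
    T, H, W, C = video.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Split each axis into (number of patches, size within a patch).
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Bring the three patch-index axes to the front.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch, flattened like a token.
    return x.reshape(-1, pt * ph * pw * C)

square_clip = np.zeros((8, 16, 16, 3))  # 8 frames, square
wide_clip = np.zeros((4, 8, 32, 3))     # shorter and widescreen

print(video_to_patches(square_clip).shape)  # (64, 96)
print(video_to_patches(wide_clip).shape)    # (32, 96)
```

The two clips give different numbers of patches, but every patch has the same size, so one model can consume both.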

Limitations of Sora

OpenAI notes in its blog post that Sora still has weaknesses, such as:

Simulating the physics of a complex scene (e.g., a person might take a bite out of a cookie, but the cookie may show no bite mark afterward)

In this example, Sora fails to model the chair as a rigid object, leading to inaccurate physical interactions.

Wanna try it?

Sora is in a testing phase with a team of experts known as red teamers, who simulate real-world use to identify vulnerabilities and weaknesses in the system.

The OpenAI team is also granting access to a number of visual artists, designers, and filmmakers to gain feedback on how to make the model more useful for professionals.

So it might take some time before it is available for public use.

Learn more: https://openai.com/sora

That's all about Sora. I'll catch up with you soon with more new stuff. Till then, happy learning!